by David | Jan 21, 2023 | Help Desk, Information Technology, PowerShell

Reading Time: 4 minutes

A few of my clients use something called LAPS (Local Administrator Password Solution). LAPS changes the local administrator password on a computer and then stores it in Active Directory. Since I don’t dive deep into this client’s computers often, I needed a quick way to type the first letter of a computer name and pull up its LAPS password. Basically, I needed the command itself to offer a list of computer names. This is fully possible with dynamic parameters. So, today we will be grabbing the LAPS password with PowerShell.

Where Does the LAPS Password Live?

Most companies that set up LAPS do so with Active Directory. By default, LAPS saves the password in a computer attribute called “ms-Mcs-AdmPwd” and stores the expiration date in “ms-Mcs-AdmPwdExpirationTime”. Thus, all you have to do is call Get-ADComputer and pull out those properties.

Get-Adcomputer -filter {name -like $Computer} -properties name,ms-Mcs-AdmPwd,ms-Mcs-AdmPwdExpirationTime | select-object name,ms-Mcs-AdmPwd,ms-Mcs-AdmPwdExpirationTime

Now the “ms-Mcs-AdmPwdExpirationTime” value is stored as a Windows file time, a large integer, and needs to be parsed into something more readable. We can use the FromFileTime method of the [datetime] type to do this.

Get-Adcomputer -filter {name -like $Computer} -properties name,ms-Mcs-AdmPwd,ms-Mcs-AdmPwdExpirationTime | select-object Name, @{l = "AdminPassword"; e = { $_."ms-Mcs-AdmPwd" } }, @{l = "AdminPasswordExpireTime"; e = { [datetime]::FromFileTime(($_."ms-Mcs-AdmPwdExpirationTime")) } }
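To see what that conversion does without touching Active Directory, here is a minimal sketch. It assumes the expiration attribute holds a Windows file time (100-nanosecond intervals counted from January 1, 1601 UTC), which is the format FromFileTime expects. The sample value is generated locally and is not real LAPS data.

```powershell
# Build a sample file time locally (a stand-in for ms-Mcs-AdmPwdExpirationTime).
$sampleDate = [datetime]::new(2023, 1, 21, 9, 0, 0)
$fileTime   = $sampleDate.ToFileTime()   # a [long], the same shape AD hands back

# Convert it back into a readable local [datetime].
$readable = [datetime]::FromFileTime($fileTime)

# A file time of 0 is the starting point: January 1, 1601 (UTC).
$epoch = [datetime]::FromFileTimeUtc(0)

$readable
$epoch
```

FromFileTime returns local time. If you would rather see UTC, FromFileTimeUtc takes the same input.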

There you have it. That’s how you get the LAPS password. But I want to take this one step further. I don’t know all the computer names. I want that information at my fingertips while I type out the command. So, I want to type something like Get-LapsPassword -ComputerName <here populate a name where I can tab> and bam, it gives it to me when I hit enter. That’s where we will dive into dynamic parameters next.

Adding Dynamic Computer Name Parameters

In a previous article, we went over how to make a dynamic parameter. I want to help refresh memories by doing a single parameter and show you how it can be done with this function.

The first thing we need to do is create our form. This form allows us to use the dynamic parameters and gives us spots to pull data. This is more of a road map than anything else, but it is required for dynamics.

function Get-LapsPassword {

[cmdletbinding()]

Param()

DynamicParam {}

Begin {}

Process {}

End {}

}

The first part of our dynamic parameter is its name, which we store in a template variable. From there, we create a new object: a System.Collections.ObjectModel.Collection of System.Attribute, which will hold the parameter’s attributes. Then we make a ParameterAttribute object to add to that collection later.

Building Out Objects

$paramTemplate = 'ComputerName'

$AttributeCollection = New-Object System.Collections.ObjectModel.Collection[System.Attribute]

$ParameterAttribute = New-Object System.Management.Automation.ParameterAttribute

The ParameterAttribute is where we add flags like Mandatory and Position. We add those by setting them directly on the ParameterAttribute object. A fun little fact: you can tab through and see what other properties are available on this object. Things like the help message, value from pipeline, and more are hidden here. Today we only care about Mandatory and Position.

$ParameterAttribute.Mandatory = $true

$ParameterAttribute.Position = 1

After we build out our ParameterAttribute object, we need to add it to the attribute collection we made at the start. We do this with the collection’s “.Add()” method.

$AttributeCollection.Add($ParameterAttribute)

Now we need to create another object. This will be the runtime parameter dictionary, the container PowerShell reads our dynamic parameters from. It is a System.Management.Automation object called RuntimeDefinedParameterDictionary. Say that 10 times fast…

More Objects

$RuntimeParameterDictionary = New-Object System.Management.Automation.RuntimeDefinedParameterDictionary

Now we need to make our ValidateSet. We will create an array of devices using the Get-ADComputer command. Here we push (Get-ADComputer -Filter {enabled -eq “true”}).name into a variable, and the example below also narrows the filter to Windows workstations by excluding servers. Now we have a list of active computers. Notice that we drop all other properties by using the “.name” call.

$ParameterValidateSet = (Get-ADComputer -Filter { enabled -eq "true" -and OperatingSystem -Like '*Windows*' -and OperatingSystem -notlike "*Server*" }).name

Next, we need to create another object. This object is the system management automation validate set attribute object. We can feed this object our Parameter Validate Set.

$ValidateSetAttribute = New-Object System.Management.Automation.ValidateSetAttribute($ParameterValidateSet)

Afterward, it’s time to feed the Validate Set attribute to the attribute collection from the beginning. We can accomplish this by using the “.add()” method.

$AttributeCollection.Add($ValidateSetAttribute)

Next, it’s time to bring our Attribute collection into the command line. It’s time to make the run-time parameter. Once again, a new object. This time it’s the Run time Defined Parameter object. Like the last object, we can place our data directly into it. We will want the parameter’s name, the type, a string in this case, and the validate set.

$RuntimeParameter = New-Object System.Management.Automation.RuntimeDefinedParameter($paramTemplate, [string], $AttributeCollection)

Afterward, we take the above parameter and place it into our dictionary with the “.Add()” method. We need the parameter template name and the runtime parameter.

$RuntimeParameterDictionary.Add($paramTemplate, $RuntimeParameter)

Finally, in the dynamic parameter block, we return our dictionary.

return $RuntimeParameterDictionary

Beginning

We are almost done. It’s time to bring the dynamic parameter into the function and make it useable. We do this in the beginning section. We shove the PSBoundParameters of our template name into a variable.

$MemberName = $PSBoundParameters[$paramTemplate]

Then from there, we call the $memberName in our Get-adcomputer command.
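If you want to try the pattern before pointing it at Active Directory, the sketch below wires the same objects together with a hard-coded list. The function name Get-DemoComputer and the three computer names are made up for illustration; the article’s version fills the list from Get-ADComputer instead.

```powershell
function Get-DemoComputer {
    [CmdletBinding()]
    param()

    DynamicParam {
        $paramName = 'ComputerName'

        # Hypothetical static list; the article builds this from Get-ADComputer.
        $validNames = 'PC-ALPHA', 'PC-BRAVO', 'PC-CHARLIE'

        $attributes = New-Object System.Collections.ObjectModel.Collection[System.Attribute]

        $paramAttribute = New-Object System.Management.Automation.ParameterAttribute
        $paramAttribute.Mandatory = $true
        $paramAttribute.Position = 0
        $attributes.Add($paramAttribute)

        $validateSet = New-Object System.Management.Automation.ValidateSetAttribute($validNames)
        $attributes.Add($validateSet)

        $parameter = New-Object System.Management.Automation.RuntimeDefinedParameter($paramName, [string], $attributes)
        $dictionary = New-Object System.Management.Automation.RuntimeDefinedParameterDictionary
        $dictionary.Add($paramName, $parameter)
        return $dictionary
    }

    Process {
        # Dynamic parameters do not become variables; read them from $PSBoundParameters.
        $PSBoundParameters['ComputerName']
    }
}

Get-DemoComputer -ComputerName PC-BRAVO
```

Typing Get-DemoComputer -ComputerName and pressing Tab should cycle through the three names, and anything outside the list is rejected before the Process block runs.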

The Script

It’s that time. It’s time to put it all together so you can copy and paste it into your toolbox. It’s time to grab the LAPS password with PowerShell.

function Get-LapsPassword {

[cmdletbinding()]

Param()

DynamicParam {

# Dynamic parameter: ComputerName, validated against enabled AD computers

# Set the dynamic parameters' name

$paramTemplate = 'ComputerName'

# Create the collection of attributes

$AttributeCollection = New-Object System.Collections.ObjectModel.Collection[System.Attribute]

# Create and set the parameters' attributes

$ParameterAttribute = New-Object System.Management.Automation.ParameterAttribute

$ParameterAttribute.Mandatory = $true

$ParameterAttribute.Position = 1

# Add the attributes to the attributes collection

$AttributeCollection.Add($ParameterAttribute)

# Create the dictionary

$RuntimeParameterDictionary = New-Object System.Management.Automation.RuntimeDefinedParameterDictionary

# Generate and set the ValidateSet

$ParameterValidateSet = (Get-ADComputer -Filter { enabled -eq "true" }).name

$ValidateSetAttribute = New-Object System.Management.Automation.ValidateSetAttribute($ParameterValidateSet)

# Add the ValidateSet to the attributes collection

$AttributeCollection.Add($ValidateSetAttribute)

# Create and return the dynamic parameter

$RuntimeParameter = New-Object System.Management.Automation.RuntimeDefinedParameter($paramTemplate, [string], $AttributeCollection)

$RuntimeParameterDictionary.Add($paramTemplate, $RuntimeParameter)

return $RuntimeParameterDictionary

} # end DynamicParam

BEGIN {

$MemberName = $PSBoundParameters[$paramTemplate]

} # end BEGIN

Process {

$ComputerInfo = Get-ADComputer -Filter { name -like $MemberName } -Properties *

}

End {

$ComputerInfo | select-object Name, @{l = "AdminPassword"; e = { $_."ms-Mcs-AdmPwd" } }, @{l = "AdminPasswordExpireTime"; e = { [datetime]::FromFileTime(($_."ms-Mcs-AdmPwdExpirationTime")) } }

}

}


by David | Jan 6, 2023 | Help Desk, Information Technology, PowerShell

Reading Time: 4 minutes

Today I would like to go over how to get client information from your Unifi Controller. We will first connect to our Unifi Controller with Powershell using the Unifi API. Then from there we will pull each site and create a list of different sites. Finally, we will use the site information to pull the client information from the controller.

PowerShell and the Unifi Controller API

The first step is to create a connection with our PowerShell. We will need a few items:

- User Account that Has at least read access

- The IP address of our controller

- The API port.

- A Unifi Controller that is configured correctly. (Most are now)

Ask your system administrator to create an account with read access. Check your configuration for the IP address and the port number. By default, the API port is 8443. Now that we have those pieces of information, it’s time to discuss what is needed.

Connecting to the API

To connect to the Unifi controller API, you need a Cookie. This is not like how we connected to the Graph API in our previous blog. To get this cookie, we will need to execute a post. The API URL is the IP address followed by the port number, API and login. Very standard.

$uri = "https://$($IPAddress):8443/api/login"

We will need to create a header that accepts JSON. To do this, we simply set Accept to application/json.

$headers = @{'Accept' = 'application/json' }

Now we will create our Parameters for the body. Since this is going to be a Post command, we have to have a body. This is where we will place our Username and Password. We are going to build out a Hashtable and then convert that into a json since the API is expecting a json. We will use the convertto-json command to accomplish this step.

$params = @{

'username' = $username;

'password' = $Password;

}

$body = $params | ConvertTo-Json

Now that we have our body built out, it’s time to create a session. We will use the Invoke-RestMethod command to do this. We feed it the URI we made a few steps back. The body will be the body we just built out. The content type will be ‘application/json’. Our headers will be the header we made a few steps back. We will skip the certificate check unless the controller has a trusted certificate, and most of the time it does not. Note that the -SkipCertificateCheck flag requires PowerShell 6 or later. Finally, the secret sauce. We want a session. Thus, we use the SessionVariable flag and give it a session name. Let’s go with s for the session.

$response = Invoke-RestMethod -Uri $uri `
    -Body $body `
    -Method Post `
    -ContentType 'application/json' `
    -Headers $headers `
    -SkipCertificateCheck `
    -SessionVariable s
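Backtick continuations break easily when a stray space or blank line sneaks in, so here is a hedged alternative that builds the same login call as a splat. The IP address, username, and password are placeholders, not real settings, and the actual request is left commented out so the sketch runs without a controller.

```powershell
# Placeholder values; swap in your own (assumptions for illustration only).
$IPAddress = '192.0.2.10'
$username  = 'readonly-user'
$Password  = 'example-password'

$loginParams = @{
    Uri             = "https://$($IPAddress):8443/api/login"
    Method          = 'Post'
    Body            = @{ username = $username; password = $Password } | ConvertTo-Json
    ContentType     = 'application/json'
    Headers         = @{ Accept = 'application/json' }
    SessionVariable = 's'
}

# -SkipCertificateCheck only exists in PowerShell 6 and later.
if ($PSVersionTable.PSVersion.Major -ge 6) {
    $loginParams['SkipCertificateCheck'] = $true
}

# Uncomment to actually log in:
# $response = Invoke-RestMethod @loginParams
```

A splat also makes it easy to add or drop a flag conditionally, as the version check does here.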

Grabbing Sites

Now that we have our session cookie, it’s time to grab some information. We will use the same headers, but this time we will use the session we named s. The URL for sites is /api/self/sites.

$uri = "https://$($IPaddress):8443/api/self/sites"

This URL will get the sites from your Unifi Controller. Once again we will use Invoke-RestMethod to pull the data down. This time we are using the Get method. The same content type and headers will be used, along with the skip certificate check. Instead of SessionVariable, we pass the saved session to the WebSession flag as $s.

$sites = Invoke-RestMethod -Uri $uri `
    -Method Get `
    -ContentType 'application/json' `
    -Headers $headers `
    -SkipCertificateCheck `
    -Websession $s

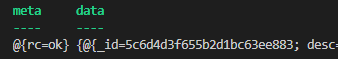

The $sites variable will contain two items: a meta property and a data property. Everything we need is inside data. To access it, we can use $sites.data.

Here is an example of what the data will look like for the sites.

_id : 5a604378614b1b0c6c3ef9a0

desc : A description

name : Ziipk5tAk

anonymous_id : 25aa6d32-a165-c1d5-73a0-ace01b433c14

role : admin

Get Client Information from your Unifi Controller

Now that we have the sites, we can go one at a time and pull all the clients from that site. We will do this using a foreach loop. There is something I want to point out first. Remember, the returned object has two properties, both arrays. The first one is meta. Meta is the metadata of the session itself. It’s something we don’t need. We do need data. For our foreach loop, we will pull directly from the data array. We want to push everything in this loop into a variable. This way we can parse out the useful data; too much data is sometimes pointless.

$Clients = Foreach ($Site in $Sites.Data) {

#Do something

}

Without using the $Sites.Data we will have to pull the data from the command itself. This can cause issues later if you want to do more complex things.

The URL we will be using is the /api/s/{site name}/stat/sta. We will be replacing the Site name with our $site.name variable and pushing all that into another Uri.

$Uri = "https://$($IPaddress):8443/api/s/$($Site.name)/stat/sta"

Then we will execute another Invoke-RestMethod. Same as before, we use the same headers, content type, and web session. The only difference is we wrap the command inside parentheses. This way we can pull the data property directly while the command executes. Less code that way.

(Invoke-RestMethod -Uri $Uri `
    -Method Get `
    -ContentType 'application/json' `
    -Headers $headers `
    -SkipCertificateCheck `
    -Websession $s).data

Each time the command runs, it will output the data that we need, and that will all drop into the $Clients variable. From here, we pull the information we want. The information that the clients produce includes MAC addresses, times, IP addresses, possible names, IDs, and more. So, it’s up to you at this point to pick and choose what you want.
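As a rough illustration of that picking and choosing, the sketch below filters and renames a few fields. The client records are mock objects with invented values; only the field names mac, ip, and sw_port come from the script later in this post.

```powershell
# Mock client records shaped like a small slice of the controller's output (invented values).
$Clients = @(
    [pscustomobject]@{ mac = '00:11:22:33:44:55'; ip = '10.0.0.21'; sw_port = 4 }
    [pscustomobject]@{ mac = '66:77:88:99:aa:bb'; ip = '10.0.0.37'; sw_port = 12 }
    [pscustomobject]@{ mac = 'cc:dd:ee:ff:00:11'; ip = $null;       sw_port = $null }
)

# Keep only clients that reported an IP, and give the fields friendlier names.
$Report = $Clients |
    Where-Object { $_.ip } |
    Select-Object @{ l = 'MacAddress'; e = { $_.mac } },
                  @{ l = 'IPAddress';  e = { $_.ip } },
                  @{ l = 'SwitchPort'; e = { $_.sw_port } }

$Report
```

The same Where-Object and Select-Object steps should work on the real $Clients variable once it is populated.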

The Script

The final script is different because I wanted to add site names and data to each output. But here is how I built it out. I hope you enjoy it.

function Get-UnifiSitesClients {

param (

[string]$IPaddress,

[string]$username,

[string]$portNumber,

[string]$Password

)

$uri = "https://$($IPaddress):$portNumber/api/login"

$headers = @{'Accept' = 'application/json' }

$params = @{

'username' = $username;

'password' = $Password;

}

$body = $params | ConvertTo-Json

$response = Invoke-RestMethod -Uri $uri `
    -Body $body `
    -Method Post `
    -ContentType 'application/json' `
    -Headers $headers `
    -SkipCertificateCheck `
    -SessionVariable s

$uri = "https://$($IPaddress):$portNumber/api/self/sites"

$sites = Invoke-RestMethod -Uri $uri `
    -Method Get `
    -ContentType 'application/json' `
    -Headers $headers `
    -SkipCertificateCheck `
    -Websession $s

$Return = Foreach ($Site in $sites.data) {

$Uri = "https://$($IPaddress):$portNumber/api/s/$($Site.name)/stat/sta"

$Clients = (Invoke-RestMethod -Uri $Uri `
    -Method Get `
    -ContentType 'application/json' `
    -Headers $headers `
    -SkipCertificateCheck `
    -Websession $s).data

Foreach ($Client in $Clients) {

[pscustomobject][ordered]@{

Site = $Site.name

SiteDescription = $Site.desc

OUI = $client.OUI

MacAddress = $client.mac

IPAddress = $Client.IP

SwitchMac = $client.sw_mac

SwitchPort = $client.sw_port

WireRate = $client.wired_rate_mbps

}

}

}

$return

}


by David | Nov 11, 2022 | Information Technology, PowerShell

Reading Time: 3 minutes

Regex… Even the PowerShell masters struggle with it from time to time. I helped a friend of mine with some URL chaos. The URL he had was a software download and the token was inside the URL. Yeah, it was weird but totally worth it. There were many different ways to handle this one, but Matches was the best way to go. What was interesting about this interaction was that I could reuse it later in another script. Unlike my other posts, this one’s format is going to be a little different. Just follow along.

The URL (Example)

"https://.download.software.net/windows/64/Company_Software_TKN_0w6xBqqzwvw3PWkY87Tg301LTa2zRuPo09iBxamALBfs512rSgomfRROaohiwgJx9YH7bl9k72YwJ_riGzzD3wEFfXQ7jFZyi5USZfLtje2H68w/MSI/installer"

Here is the string example we are working with. Inside the software installer URL, we have the name of the software, “Company_Software_”, and the token, “0w6xBqqzwvw3PWkY87Tg301LTa2zRuPo09iBxamALBfs512rSgomfRROaohiwgJx9YH7bl9k72YwJ_riGzzD3wEFfXQ7jFZyi5USZfLtje2H68w”. The question is, how do you extract the token from this URL? Regex’s Matches, we summon you!

Matches is one of the regex’s powerhouse tools. It’s a simple method that allows us to search a string for a match of our Regex. This will allow us to pull the token from URLs with PowerShell. First, it’s best to link to useful documentation. Here is the official Microsoft documentation. Sometimes it’s helpful. Another very useful tool can be found here.

PowerShell’s Regex can be expressed in many different ways. The clearest and most concise way is to use the -match flag.
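A minimal sketch of the -match form follows. The URL is a shortened, made-up stand-in for the example above, and the pattern is the one built later in this post.

```powershell
# A shortened, invented URL in the same shape as the example above.
$String = 'https://download.example.net/windows/64/Company_Software_TKN_abc123XYZ/MSI/installer'
$Regex  = '_TKN_([^/]+)'

$found = $String -match $Regex   # $true or $false
$found
$Matches                         # filled in only when the match succeeded
```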

This of course produces a true or false result. Then if you call the $Matches variable, you will see all of the matches. Another way of doing this is by using the static Matches method on the [regex] type.

[regex]::Matches($String, $Regex)

This method is the same as above, but sometimes it makes more sense when dealing with complex items. The types method is the closest to the “.net” framework.

The Regex

Next, let’s take a look at the Regex itself. We are wanting to pull everything between TKN_ and the next /. This was a fun one.

The first part is the token marker. We want our search to start at _TKN_. This clears out all of the following information automatically: https://.download.software.net/windows/64/Company_Software. The next part is a group. Notice the (). This creates a capture group for us to work with. Inside this group, [^/] matches any single character that is not a /. So we are grabbing everything from just after _TKN_ up to the next /. We want all of those characters, so we place a greedy little + after the brackets. This selects our token. The result has two entries: index 0 is the whole match including _TKN_, and index 1 is the capture group without it. Thus we want the second entry, aka index 1.

$String -match '_TKN_([^/]+)'

$Matches[1]

Another way to go about this is the method-type model.

$Token = [regex]::Matches($String, '_TKN_([^/]+)') | ForEach-Object { $_.Groups[1].Value }

It’s the same concept, but this time we parse the match objects and grab the value from group one.
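If index numbers feel easy to forget, a named capture group is one option. This is a sketch, not part of the original solution; the group name Token and the shortened URL are assumptions for illustration.

```powershell
# Shortened, invented URL in the same shape as the example.
$String = 'https://download.example.net/windows/64/Company_Software_TKN_abc123XYZ/MSI/installer'

# (?<Token>...) names the group, so the result can be read by name.
if ($String -match '_TKN_(?<Token>[^/]+)') {
    $Token = $Matches['Token']
}

$Token
```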

Replace Method

Another way to go about this is by using replace. This method is much easier to read for those without experience in regex. I always suggest making your scripts readable to yourself in 6 months. Breaking up the string using replace makes sense in this light. The first part of the string we want to pull out is everything before the _TKN_ and the TKN itself. The second part is everything after the /. Thus, we will be using two replaces for this process.

$String -replace(".*TKN_",'')

Here we are removing everything before and the TKN_ itself. The .* ensures this. Then we wrap this code up and run another replace.

$Token = ($String -replace(".*TKN_",'')) -replace('/.*','')

Now we have our token. This method is easier to follow.

Conclusion

In Conclusion, parsing URLs With PowerShell can be tough, but once you get a hang of it, life gets easier. Use the tools given to help understand what’s going on.


Image created with midjourney AI.

by David | Nov 4, 2022 | Group Policy, Information Technology, PowerShell

Reading Time: 2 minutes

Documentation is a big deal in the world of IT. There are different levels of documentation. I want to go over in-place documentation for group policy. Comments in Group Policy are in-place documentation.

How to comment on a Group Policy

This process is not straightforward by any stretch of the imagination. The first and foremost way to add comments to a Group Policy is to use the GUI.

- Open Group Policy Management Console

- Select the policy you wish to comment

- Right-click the policy in question and click edit

- Inside the group policy management editor, right-click the policy name and click properties

- Click the comment tab

- Now enter your comment.

- click Apply and Ok

The second way to add a comment in group policy is by using PowerShell. The Description of a policy is where the comment lives. Thus using the command Get-GPO will produce the comment. We will dig more into that later.

Get-GPO -name "Control Panel Access"

Using the Get-Member command we can pipe our Get-GPO command and see what is doable. You will be treated to a list of member types and what they are capable of. The description has a get and a set method to it. This means, you can set the description, aka comment.

(Get-GPO -name "Control Panel Access").Description = "This is my comment"

Suggestions

Here are a few suggestions for documenting the policy like this.

- Use (Get-Date).ToString(“yyyy-MM-dd_HH_mm_ss”) at the beginning to set up your date and time. (HH gives a 24-hour clock; hh would be ambiguous between AM and PM.)

- Then, I would add the author of the policy/comment

- A quick description of the policy

- Whether it’s a user or computer policy.

- Any WMI filters.

More information here helps the next person or even yourself months down the road. Don’t go overboard as it can cause issues later. Using the ‘`n’ will create a new line which can be helpful as well.
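Put together, the suggestions above might look like the sketch below. Every value is a placeholder, and the line that writes to the GPO is commented out because it needs the GroupPolicy module and rights on the policy.

```powershell
# All values below are placeholders for illustration.
$Author      = 'David'
$Description = 'Blocks Control Panel access for kiosk users'
$Scope       = 'User'
$WmiFilter   = 'None'

$Stamp = (Get-Date).ToString('yyyy-MM-dd_HH_mm_ss')   # HH = 24-hour clock

# `n inside double quotes inserts a line break between fields.
$Comment = "$Stamp`nAuthor: $Author`nDescription: $Description`nScope: $Scope`nWMI Filter: $WmiFilter"
$Comment

# To apply it (requires the GroupPolicy module):
# (Get-GPO -Name 'Control Panel Access').Description = $Comment
```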

Pulling Comments with PowerShell

Now that we have all the policies documented, we can pull the information from the in-place documentation. We do this by using the Get-GPO -All command. One way to do this is by using the Select-Object command and passing everything into a CSV. I personally don’t like that, but it works.

Get-GPO -All | select-object DisplayName,Description | export-csv c:\temp\GPO.csv

I personally like going through the GPO’s and creating a txt file with the comment and the file name being the display name.

Get-GPO -All | foreach-object {$_.Description > "c:\temp\$($_.Displayname).txt"}
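One caveat with using the display name as a file name: a policy name can contain characters the file system rejects, such as a slash. The sketch below shows one way to swap those out first. The GPO objects are mocks with invented names, and nothing is written to disk.

```powershell
# Mock objects standing in for Get-GPO -All output (names are invented).
$Gpos = @(
    [pscustomobject]@{ DisplayName = 'Control Panel Access'; Description = 'Blocks Control Panel' }
    [pscustomobject]@{ DisplayName = 'Printers/Floor 2';     Description = 'Maps floor 2 printers' }
)

$invalid = [System.IO.Path]::GetInvalidFileNameChars()

$Files = foreach ($Gpo in $Gpos) {
    # Swap every character the file system rejects for an underscore.
    $safeName = -join ($Gpo.DisplayName.ToCharArray() | ForEach-Object {
        if ($invalid -contains $_) { '_' } else { $_ }
    })
    [pscustomobject]@{ FileName = "$safeName.txt"; Content = $Gpo.Description }
}

$Files
# To write them out:
# $Files | ForEach-Object { $_.Content > (Join-Path 'c:\temp' $_.FileName) }
```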

Conclusion

I would like to go deeper into In-Place Documentation as it is very useful down the road. Powershell uses the #, other programs use different methods as well. If you get a chance to place in place documentation, life becomes easier when you are building out the primary documentation as you have a point of reference to look back at.


by David | Oct 28, 2022 | Deployments, Information Technology, PowerShell

Reading Time: 3 minutes

There have been a few times where I have needed to enable Remote Desktop Protocol on remote computers. So, I built out a simple but powerful tool to help me with just this. It uses the Invoke-Command command to enable RDP. So, let’s dig in.

The Script – Enable RDP on a Remote Computer

function Enable-SHDComputerRDP {

<#

.SYNOPSIS

Enables target computer's RDP

.DESCRIPTION

Enables target Computer's RDP

.PARAMETER Computername

[String[]] - Target Computers you wish to enable RDP on.

.PARAMETER Credential

Optional credentials switch that allows you to use another credential.

.EXAMPLE

Enable-SHDComputerRDP -computername <computer1>,<computer2> -Credential (Get-credential)

Enables RDP on computer1 and on computer 2 using the supplied credentials.

.EXAMPLE

Enable-SHDComputerRDP -computername <computer1>,<computer2>

Enables RDP on computer1 and on computer 2 using the current credentials.

.OUTPUTS

[None]

.NOTES

Author: David Bolding

.LINK

https://therandomadmin.com

#>

[cmdletbinding()]

param (

[Parameter(

ValueFromPipeline = $True,

ValueFromPipelineByPropertyName = $True,

HelpMessage = "Provide the target hostname",

Mandatory = $true)][Alias('Hostname', 'cn')][String[]]$Computername,

[Parameter(HelpMessage = "Allows for custom Credential.")][System.Management.Automation.PSCredential]$Credential

)

$parameters = @{

ComputerName = $ComputerName

ScriptBlock = {

Enable-NetFirewallRule -DisplayGroup 'Remote Desktop'

Set-ItemProperty 'HKLM:\SYSTEM\CurrentControlSet\Control\Terminal Server\' -Name "fDenyTSConnections" -Value 0

Set-ItemProperty 'HKLM:\SYSTEM\CurrentControlSet\Control\Terminal Server\WinStations\RDP-Tcp\' -Name "UserAuthentication" -Value 1

}

}

if ($PSBoundParameters.ContainsKey('Credential')) { $parameters += @{Credential = $Credential } }

Invoke-Command @parameters

}

The breakdown

Comments/Documentation

The first part of this tool is the in-house Documentation. Here is where you can give an overview, description, parameters, examples, and more. Using the command Get-help will produce the needed information above. On a personal level, I like adding the type inside the parameters. I also like putting the author and date inside the Notes field.

Parameters

We are using two parameters. A computer name parameter and a credential parameter. The ComputerName parameter contains a few parameter flags. The first is the Value from Pipeline flags. This allows us to pipe data to the function. The next is the Value From Pipeline by Property name. This allows us to pass the “ComputerName” Value. Thus we can pull from an excel spreadsheet or a list of computer names. Next, we have the Help Message which is just like it sounds. It’s a small help message that can be useful to the end user. Finally, we have the Mandatory flag. As this command is dependent on that input, we need to make this mandatory. The next item in computername is the Alias. This allows us to use other names. In this example, we are using the hostname or the CN. This is just a little something that helps the end user. Finally, we have the type. This is a list of strings which means we can target more than one computer at a time.

The next parameter is the Credential parameter. This one is unique. The only flag we have here is the Help message. The type is a little different. The type is a System.Management.Automation.PSCredential. And yes, it’s complex. A simple rundown is, use Get-Credential here. This function is designed to be automated with this feature. If you are using a domain admin account, you may not need to use this. However, if you are working on computers in a different domain, and don’t have rights, you can trigger this parameter.

param (

[Parameter(

ValueFromPipeline = $True,

ValueFromPipelineByPropertyName = $True,

HelpMessage = "Provide the target hostname",

Mandatory = $true)][Alias('Hostname', 'cn')][String[]]$Computername,

[Parameter(HelpMessage = "Allows for custom Credential.")][System.Management.Automation.PSCredential]$Credential

)
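One detail worth knowing about the pipeline flags: piped input is bound one item at a time, and a function body with no process block runs as an end block, so it only sees the last piped item. The sketch below uses a hypothetical function, Test-PipelineTarget, with the same parameter shape to show a process block handling every item. It only prints text and touches no remote machines.

```powershell
function Test-PipelineTarget {
    [CmdletBinding()]
    param (
        [Parameter(Mandatory = $true, ValueFromPipeline = $true, ValueFromPipelineByPropertyName = $true)]
        [Alias('Hostname', 'cn')]
        [string[]]$ComputerName
    )
    process {
        # Runs once per piped item; the inner loop also covers -ComputerName a,b.
        foreach ($Computer in $ComputerName) {
            "Would target $Computer"
        }
    }
}

'PC-ALPHA', 'PC-BRAVO' | Test-PipelineTarget
```

Passing the names directly with -ComputerName works either way, since the whole array arrives at once.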

The Script Block

Now we need to create the script block that will be used inside the invoke-command. We are going to build out a splat. We build splats with @{}. The Information will be inside here. When we push a splat into a command we need to add each flag from that command that is required. Here we are going to be adding the computer name and script block. The computer name flag is a list of strings for our invoke-command. Thus, we can drop the Computername into the ComputerName. Yeah, that’s not confusing. The script block is where the action is.

$parameters = @{

ComputerName = $ComputerName

ScriptBlock = {

#Do something

}

}

Let’s open up some knowledge. The first thing we need to do is enable the remote desktop firewall rules. This will allow the remote desktop through the firewall.

Enable-NetFirewallRule -DisplayGroup 'Remote Desktop'

Next, we need to set the registry values. The first sets fDenyTSConnections to 0, which stops the machine from denying Terminal Services connections. The second sets UserAuthentication to 1, which requires Network Level Authentication.

Set-ItemProperty 'HKLM:\SYSTEM\CurrentControlSet\Control\Terminal Server\' -Name "fDenyTSConnections" -Value 0

Set-ItemProperty 'HKLM:\SYSTEM\CurrentControlSet\Control\Terminal Server\WinStations\RDP-Tcp\' -Name "UserAuthentication" -Value 1

Adding Credentials

Now that we have created the parameter, it’s time to add credentials when needed. We do this by asking if the Credential parameter was supplied. This is done through the $PSBoundParameters variable. We call its ContainsKey method to see if Credential is set. If it is, then we add the credential to the splat.

if ($PSBoundParameters.ContainsKey('Credential')) { $parameters += @{Credential = $Credential } }

Finally, we invoke the command. We are using the splat, which is written as @parameters instead of $parameters.

Invoke-Command @parameters

And that’s how you can quickly Enable RDP on a Remote Computer using PowerShell within your domain.
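To watch the conditional splat work without remoting anywhere, here is a small sketch. Show-Target is a hypothetical stand-in for Invoke-Command and Invoke-Demo is a made-up wrapper; both exist only for this illustration.

```powershell
# Hypothetical stand-in for Invoke-Command so the sketch runs anywhere.
function Show-Target {
    param ([string[]]$ComputerName, [pscredential]$Credential)
    $who = if ($Credential) { $Credential.UserName } else { 'current user' }
    foreach ($Computer in $ComputerName) { "$Computer as $who" }
}

function Invoke-Demo {
    param ([string[]]$ComputerName, [pscredential]$Credential)

    $parameters = @{ ComputerName = $ComputerName }

    # Only add the key when the caller actually passed -Credential.
    if ($PSBoundParameters.ContainsKey('Credential')) {
        $parameters['Credential'] = $Credential
    }

    Show-Target @parameters
}

Invoke-Demo -ComputerName 'PC-ALPHA', 'PC-BRAVO'
```

Leaving the key out entirely, rather than passing an empty credential, lets the inner command fall back to the current user.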


by David | Oct 21, 2022 | Help Desk, Information Technology, PowerShell

Reading Time: 2 minutes

During my in-house days, one of the things I had to do constantly was clear people’s print jobs. So I learned to clear print jobs with PowerShell to make my life easier. It surely did. With PowerShell I could remotely clear the print jobs, as most of my machines were on the primary domain. All you needed to know was the server and the printer’s name. The server could be the computer in question if it’s a local printer. Or it could be the print server. Wherever the queue is being held.

The Script

function Clear-SHDPrintJobs {

[cmdletbinding()]

param (

# An alias cannot repeat the parameter's own name, so only 'Printername' is listed.
[parameter(HelpMessage = "Target Printer", Mandatory = $true)][alias('Printername')][String[]]$name,

[parameter(HelpMessage = "Computer with the printer attached.", Mandatory = $true)][alias('Computername', 'Computer')][string[]]$PrintServer

)

foreach ($Print in $PrintServer) {

foreach ($N in $name) {

$Printers = Get-Printer -ComputerName $Print -Name "*$N*"

Foreach ($Printer in $Printers) {

$Printer | Get-PrintJob | Remove-PrintJob

}

}

}

}

The Breakdown

It’s time to break down this code. Both parameters are mandatory, since the function needs this information. Notice that both parameters are lists of strings. This means you can target the same printer name on multiple print servers with one command.

Parameters

We are using two parameters. The first is the name of the printer. This parameter is mandatory. We are using the Alias attribute here as well. Basically, it gives the parameter alternate names to make the command easier to work with, so it can be called as -Name or -Printername. It is also a list of strings. This way we can pass in an array if need be. I’ll go over why that’s important later. Finally, we have a help message. This can be useful for the user to figure out what they need to do.

[parameter(HelpMessage = "Target Printer", Mandatory = $true)][alias('Printername')][String[]]$name

The next parameter is like the first. It is a Mandatory parameter with alias options. The options are “ComputerName” and “Computer”. I set the name as “PrintServer” because I dealt with print servers most of the time. Once again we have a list of strings for multiple machines.

Foreach Loops

Next, we look at our function. This is where we Clear Print Jobs with PowerShell. There are three loops in total. The first foreach loop cycles through the print server list. So for each print server, we enter the next loop. The next loop consists of the Printer Names.

foreach ($Print in $PrintServer) {

foreach ($N in $name) {

#Do something

}

}

Inside the Name loop, we use the get-printer command. We target the print server and ask for a printer that contains the name we requested. Thus, if you use a *, you can clear out all the print jobs from that device. This is a very powerful option.

$Printers = Get-Printer -ComputerName $Print -Name "*$N*"

After gathering the printers from the server, we start another loop. This loop will be foreach printer we have from this server. We pipe the output from the Printers to Get-PrintJob. This allows us to see the print jobs. Then we pipe that information into Remove-PrintJob. This clears the print job.

$Printer | Get-PrintJob | Remove-PrintJob

That’s it for this function. It’s a great little tool that will change how you clear print jobs of buggy print systems.
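Because the name is wrapped in wildcards, it pays to check how wide a pattern reaches before removing anything. The sketch below runs the same matching logic against an invented list of printer names instead of Get-Printer.

```powershell
# Invented printer names standing in for Get-Printer output on one server.
$AllPrinters = 'HR-Laser-01', 'HR-Color-02', 'Sales-Laser-01'

$name = 'HR', 'Sales-Laser'

$Matched = foreach ($N in $name) {
    # Same pattern the function builds: the name wrapped in wildcards.
    $AllPrinters | Where-Object { $_ -like "*$N*" }
}

$Matched
```

Here 'HR' picks up both HR printers, and a broader term such as 'Laser' would reach across departments, so a quick preview like this can save a few angry phone calls.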

Conclusion

In conclusion, I have used this function a few hundred times in my day. The environment was the domain level. This does not work for cloud print options.


Images made by MidJourney AI